Examining the Ironies and Risks of Artificial Intelligence

The Paradox of AI Professionals Warning About AI

Artificial intelligence (AI) professionals, including the CEOs of prominent companies like Google DeepMind and OpenAI, recently warned about the potential extinction risk posed by AI. This declaration of concern reveals a brutal double irony: first, these professionals and companies are the very creators of this technology, and second, they possess the power to ensure that AI benefits humanity rather than harms it.

The Power and Responsibility of AI Development Companies

As the creators of AI technology, these companies hold significant responsibility for ensuring the ethical and safe development of AI. They have the power to control how AI is implemented and to prevent potential negative consequences. However, to truly address the risks associated with AI, it is crucial that these companies adopt human rights due diligence frameworks.

Generative AI: A Transformational Technology

Generative AI refers to algorithms that can autonomously generate new content like images, text, audio, video, and computer code. These algorithms are trained on real-world datasets, enabling them to create outputs that are often indistinguishable from authentic data. This technology has rapidly advanced in recent years, with tools like ChatGPT attracting over 100 million users in a matter of months, outpacing the growth of popular platforms like TikTok.

Learning from History: The Unforeseen Consequences of Technology

Throughout history, new technologies have both advanced human rights and caused harm in unforeseen ways. When internet search tools, social media platforms, and mobile technology were first introduced, their potential to drive and multiply human rights abuses was not fully understood. Meta’s role in the ethnic cleansing of the Rohingya in Myanmar and the use of spyware against journalists show what can happen when the social and political implications of a technology are not adequately considered.

The Need for a Human Rights-Based Approach to Generative AI

To address the risks associated with generative AI, a human rights-based approach is crucial. Several early steps can guide the development and implementation of such an approach. First, companies developing generative AI tools must adopt a rigorous human rights due diligence framework, as outlined in the UN Guiding Principles on Business and Human Rights. This framework should include proactive identification, transparency, mitigation, and remediation of actual and potential harms.

Engaging with Marginalized Communities

Companies developing generative AI tools must actively engage with academics, civil society actors, and community organizations, particularly those representing marginalized communities. Evidence shows that marginalized communities are most likely to suffer adverse consequences from new technologies. Engaging these communities at the product design and policy development stages can help anticipate and address potential harms and biases.

Stepping Up: The Role of Human Rights Organizations

In the absence of regulation to prevent and mitigate the potential harms of generative AI, human rights organizations must take the lead. These organizations need to deepen their understanding of generative AI tools and engage proactively in research, advocacy, and community outreach. By identifying and responding to harm, they can contribute to the responsible development and use of generative AI.

An Urgent Call to Action

It is essential to recognize the transformative power of generative AI and to work towards ensuring it benefits all of humanity. Companies developing AI must prioritize human rights due diligence frameworks and engage with marginalized communities from the outset to prevent discriminatory and harmful outcomes. At the same time, the human rights community must work actively to understand and respond to the potential dangers of generative AI.

The Need for Collaboration and Responsibility

Addressing the risks and challenges of AI requires collaboration between technology developers, human rights organizations, governments, and communities. Implementing a human rights-based approach to identifying and mitigating harm is a critical first step in cultivating responsible AI development. Complacency is not an option, nor is cynicism. It is the shared responsibility of all stakeholders to ensure the responsible and beneficial use of this technology.

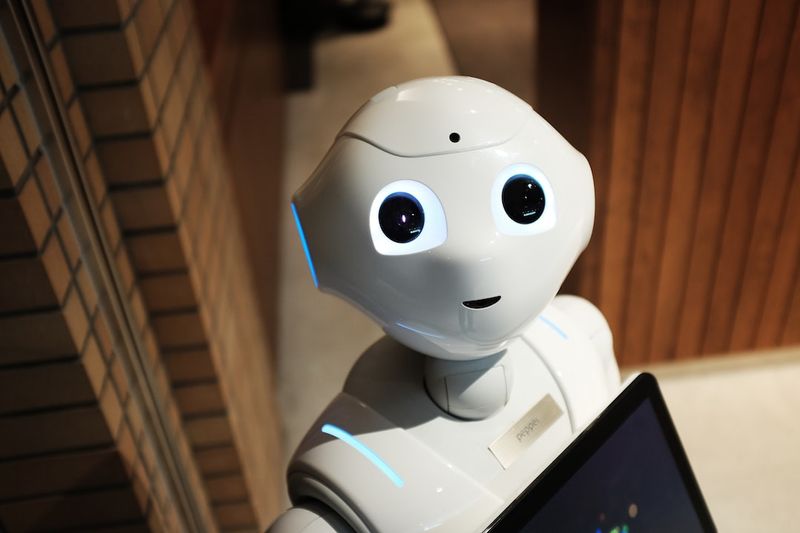

<< photo by Alex Knight >>